Recording The Music Of The Lahu

Greg Simmons adds Neumann’s NDH20 headphones to his mobile production rig and ventures into the hills of Northern Thailand to record the music of the Lahu ethnic group.

This story began life as a paragraph in my field report for Neumann’s NDH20 closed-back headphones, but it kept growing. It contains information that some readers will find useful or interesting but that has little to do with the performance of the NDH20s in the field, so it’s been spun off as a separate story.

The NDH20 field report describes how I used Google Earth to identify a small cluster of villages belonging to the Lahu ethnic group in Northern Thailand, found accommodation on the side of a hill, and organised some recordings. This story picks up from there…

RECORDING SESSIONS

Three instrumental recording/filming sessions were planned. The first session was a wind instrument called the ‘nor’, the second session a string instrument called the ‘tue’, and the third session featured solo performances on the nor, the tue and a bamboo flute. My goal was to make ‘contextual recordings’, ie. to capture each instrument within the sonic context of the world it was made for – which means capturing enough of the background sounds to provide the appropriate ambience. It’s worth mentioning that many traditional instruments were designed to be played outdoors in noisy environments, and therefore lack the aesthetic refinements that evolve from centuries of performance in concert halls and decades of recording in studios. They often contain squeaks, buzzes, rattles and resonances that are barely audible in their traditional sonic context but are over-exposed when close-miked. To minimise those sounds and capture the right amount of ambience, I focus on recording ‘in situ’, at a sufficient distance to capture the instrument’s overall sound without getting hung up on the little things. I’ll do what’s necessary to make the recording fit the delivery medium (e.g. control the dynamic range to suit Youtube) and satisfy a Western aesthetic, but I try to preserve the ‘warts and all’ authenticity whenever possible.

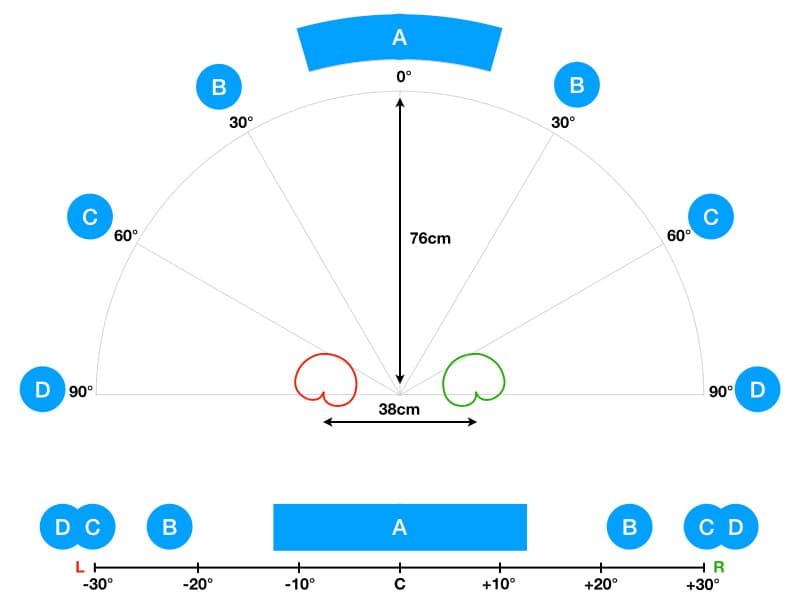

To satisfy these requirements I use a direct-to-stereo microphone technique based on a pair of directional microphones spaced at least 38cm apart and angled inwards to face the instrument. This technique aims to create a narrow image with a centre-focus for sounds arriving from in front (the instrument), but a wide image with a hole in the middle for sounds arriving from the sides and rear (the contextual ambience). The goal is to create a stereo recording with the instrument firmly front and centre, and the ambience enveloping it at the sides and rear where it is less intrusive. Further information about this technique is given later (see ‘Microphone Technique’).

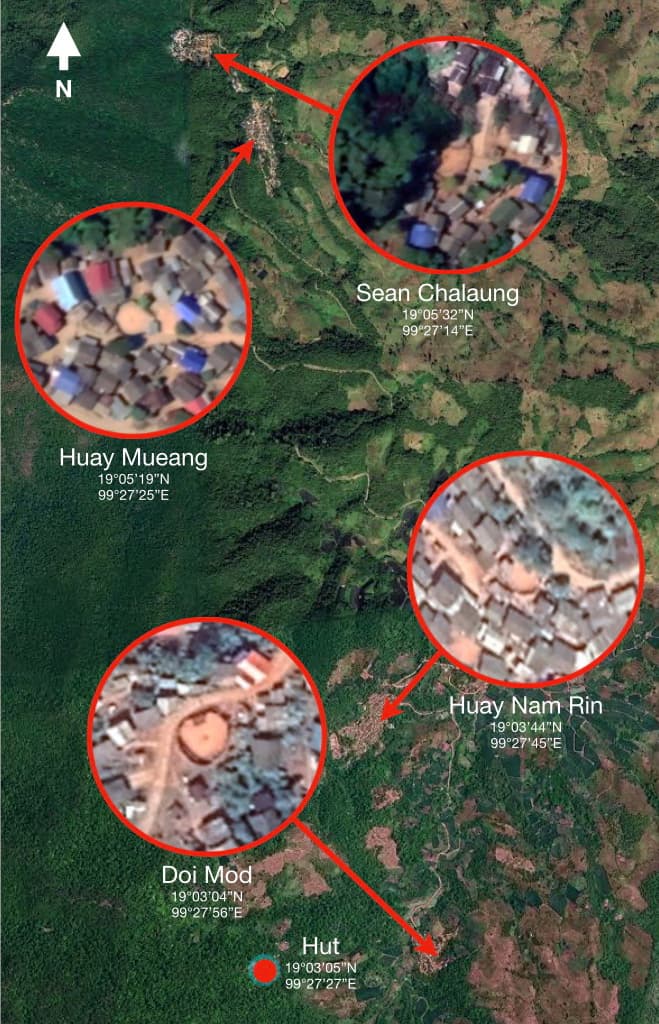

I also had the opportunity to record and film ceremonies in three different jakuh. A ‘jakuh’ is the traditional dancing ground of the Lahu ethnic group, and forms the community centre of every Lahu village. It’s a circular patch of compacted earth with a ceremonial mound in the centre, and is fenced off all around with tall wooden planks that provide acoustic reinforcement. A jakuh can be easily identified in satellite images from Google Earth, which is how I found the four villages visited during this recording expedition. The image below contains an overview of the area I visited, along with zoomed-in views of each village with its jakuh clearly visible in the centre.

RECORDINGS

The video below is the first piece of music from the first session, featuring Lahu musician Jalow playing the ‘nor’, a member of the ‘khene’ or ‘khaen’ family of free reed aerophones commonly seen in Northern Thailand and throughout Laos. This session took place on a wooden deck between two huts in the middle of the village, opposite the jakuh, with considerable background noise from roosters, chickens, kids, motorbikes, the chatter of curious locals, and, of course, the arrival of the obligatory beers. These sounds are mostly heard in the right channel, which is where all the external action took place. By the sixth piece of music Jalow had settled into the recording process and was more animated, swinging the nor from side to side as he played. Due to the length of the nor’s pipes and the limited space I had to work in (I could not move any further back on the deck!), the image shifts for sounds coming from the ends of the nor’s pipes are quite extreme.

The next video features Lahu musician Assam playing the ‘tue’ (pronounced ‘toong’, quickly and abruptly). This session took place on a deck behind a hut. Although the location was quieter than for the nor session, the tue is also much softer than the nor, so the level of the background noise relative to the instrument is not much different between the two sessions. The body of the tue is further to the left of the image than I would’ve liked, but the performance space for this recording was so narrow that there was no option to move the mics or the musician sideways. The most prominent noises in this session came from family members coming and going from the adjacent hut.

Thankfully, in the nor and tue examples presented so far, the movement heard in the stereo image correlates with the movement seen in the visual image, so it remains an acceptable outcome.

The following videos show Jalow and Assam talking about how their instruments are made. Both were recorded with the same microphone placement used for the tue recording shown above. Although the microphones were not re-positioned for the interviews, they do a reasonable job of capturing the dialogue between the musician and my translator Winai.

The third session featured Lahu musician Japua performing on the nor, the tue and a bamboo flute. This session took place in a bamboo hut on a relatively quiet day with few distracting sounds apart from a nearby rooster, some passing vehicles, and a dull pounding sound as neighbours prepared rice for the evening’s ceremonies. In this session I had more room to work with than in the previous sessions, so I was able to place the microphones far enough back for the technique’s desired result, keeping the instrument mostly within a narrow space in the centre of the stereo image. After achieving that, however, I decided to increase the spacing between the mics to make things more interesting and spatial. This introduced the same kind of exaggerated image shifting heard in the earlier recordings, but reduced the distraction of external sounds even further. The image shifting is barely noticeable in this flute recording.

Based on the videos presented so far you could be forgiven for dismissing the music of the Lahu as simple and repetitive. Despite my best efforts at recording them in a village setting, my so-called ‘contextual recordings’ are inadvertently out of context. To capture the sounds in their true context, I needed to enter the jakuh…

INTO THE JAKUH

In the first two sessions (described above) I recorded six pieces from Jalow playing the nor and four pieces from Assam playing the tue. It all sounded very similar to me, and I wondered how the Lahu (or anyone else for that matter) could tell the pieces apart. The answer became a little clearer while recording in the jakuh, where I heard a missing ingredient…

In large ceremonies a musician performs on the nor, tue or flute while walking around the inner perimeter of the jakuh. The men of the village follow the musician hand-in-hand and stamp out an accompanying rhythm with their feet, while the women form lines radiating outwards from the ceremonial mound and move around it like the hands of a clock – supplementing the men’s foot stomping with a shuffling sound made by scraping their feet over the compacted ground. This can be seen and heard in the video excerpt below, which was taken during the Lahu’s celebrations for Chinese New Year in the village of Huay Nam Rin. The audio in this video was captured with the camera’s internal microphones, as explained in ‘Back To The Village…’ below.

The following video, from the jakuh in the village of Doi Mod, shows a small family ceremony held in aid of an injured villager; it was a casual affair with only a handful of people circling the ceremonial mound. To record this I used a pair of omnis spaced 55cm apart, placed at the outer perimeter of the circle. It’s possible that I changed the polar responses to cardioid; I remember contemplating it but cannot remember actually doing it (there are a lot of things going on at once in these events). If I did change to cardioid I would’ve maintained the same spacing and kept the mics facing straight ahead, because a pair of forward-facing cardioids (0° angle between them) spaced around 55cm apart is a distant-miking technique that gives excellent imaging and depth when recording soundfields that are as deep as they are wide (e.g. ensembles performing in circular or square configurations), while rejecting sounds from behind the mics such as an audience or passing traffic. For sounds arriving from anywhere within ±45° of front-centre, its imaging is almost identical to that of omnis spaced 55cm apart, but with the added benefits of rear rejection and adjustable focus (e.g. changing the vertical angle to achieve a better balance between front and rear sound sources). The downside, compared to using omnis, is the loss of low frequency energy as the proximity effect diminishes with distance (as discussed in the first instalment of my on-going series about microphones). In situations where there are extraneous noise sources I’ll take the rejection of directional microphones over the extended low frequency response of omnis; compensating for a lack of low frequencies is easy, but removing unwanted sounds is not. (I added a low frequency shelf to enhance the foot stomps in this video, as discussed in ‘Post-Production’ below, which further supports the possibility that I switched to cardioid…)
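Two claims in the paragraph above are easy to check numerically. The first is that cardioids lose little level within ±45° of front-centre. The second is that they reject sound arriving from behind. The sketch below is a minimal model that assumes ideal textbook first-order polar patterns. The pattern constants are an assumption, not measurements of the MKH800s, and real microphones deviate from them, especially at the rear and at high frequencies.

```python
import math

# Ideal first-order polar patterns: gain(theta) = a + (1 - a) * cos(theta).
# Textbook values (assumed); real microphones deviate from these.
PATTERNS = {
    "omni": 1.0,
    "cardioid": 0.5,
    "hypercardioid": 0.25,
    "figure-8": 0.0,
}

def pattern_gain_db(pattern, theta_deg, floor_db=-60.0):
    """Sensitivity in dB relative to on-axis (0 deg) for an ideal pattern.

    The magnitude is used, so the reversed-polarity rear lobe of a
    hypercardioid or figure-8 still shows up as a level. Ideal nulls are
    clamped to floor_db instead of -infinity.
    """
    a = PATTERNS[pattern]
    g = abs(a + (1.0 - a) * math.cos(math.radians(theta_deg)))
    if g <= 10 ** (floor_db / 20):
        return floor_db
    return 20 * math.log10(g)

for theta in (0, 45, 90, 135, 180):
    row = ", ".join(f"{p}: {pattern_gain_db(p, theta):6.1f} dB" for p in PATTERNS)
    print(f"{theta:3d} deg -> {row}")
```

An ideal cardioid is down only about 1.4dB at 45°, which is why its frontal imaging stays close to that of omnis. Its theoretical rear null is clamped here to an arbitrary floor. In practice the rejection is finite, a point made later in 'Imaging'.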

In the first jakuh session (seen in the above video) I heard the music I’d previously recorded being performed in its traditional setting, with the rhythmic stomping and shuffling of feet adding a percussive element. Hearing the music with the foot percussion made a huge difference. Although the music sounds very similar to the unaccustomed listener, each piece of music has a unique pattern of steps (and therefore ‘foot percussion’) to accompany it, and the Lahu people fall into the correct step with no hesitation. In the following interview I attempted to find out how they knew which step pattern to use. I thought I understood it at the time of the video, but looking over it now I feel none the wiser! (Please remember that there were no subtitles at the time of the conversation so what seems obvious due to the subtitles was not obvious at the time!)

POST-PRODUCTION

The videos presented here have been selected from 26 videos made during my expedition into the Lahu culture. The audio in all of these videos has been through a streamlined mastering approach that allows me to quickly make acceptable videos that can be transferred to the musicians’ mobile phones within an hour or two after each session. It’s part of my iOS-based ‘on-the-spot-post-production’ approach explained in Going Further, but streamlined by changing DAWs from Auria Pro to Cubasis 3. Thanks to the benefits of time I have made some small tweaks to the videos presented here, but the sound is essentially the same as it was in the videos that I prepared immediately after the sessions.

With the exception of the video made in Huay Nam Rin (Lahu 26, above), all the recordings were captured direct-to-stereo as interleaved stereo wav files using the same mics (Sennheiser MKH800s) into the same field recorder (Nagra Seven), at similar recorded levels (averaging -20dB FS), and often in similar spaces. This means there are already many tonal, spatial and level similarities between each piece of music, which simplifies the downstream processing. All monitoring on location was done with Neumann’s NDH20 headphones at the same headphone volume setting which, combined with maintaining a consistent average level on the recording meters, further adds to the consistencies between files by ensuring all tone-related microphone placement decisions are made at the same monitored level.

All mastering for the videos was monitored through the NDH20s and cross-referenced through the internal speakers of my iPad Pro – an appropriate reference when preparing audio for social media. I felt no pressing need to apply any corrective tonal EQ during monitoring or mastering (apart from the subtle tweaks that Bark Filter applied dynamically, and a subtle low frequency shelf as described below), and was pleased by how well the captured sound translated through the iPad Pro’s speakers as well as my iPhone’s speakers, my Audio-Technica M50x headphones, my Etymotic ER4s canal phones, and my Bose QC35 noise-cancelling headphones. Considering the minimal amount of audio processing applied to these recordings, this broad translation is a good indicator of the reliability of the NDH20s as monitors on location when choosing microphone placements.

At the end of each session the audio files were loaded directly into Cubasis 3 from the Nagra Seven’s SD card. Cubasis 3 offers direct access to the SD card from within the app (rather than going through an intermediary I/O app like AudioShare), accelerating and simplifying the process considerably. Furthermore, the Nagra Seven allows a file naming scheme (with detailed text and incremental numbering) to be set up before the session; if I get that right, the imported files will appear as sensibly-named regions in Cubasis 3.

I have a session template that includes a stereo track for each recording, and each stereo track has a limiter and EQ installed as pre-fade inserts. I rarely use these but the limiter is handy for unruly peaks such as fireworks, and the EQ is handy for replacing the low frequencies that are lost when using directional polar responses at distances greater than a metre or so. The goal, however, is to stick to global ‘one size fits all’ processing as much as possible and avoid getting finicky with individual files. It’s all about fast turnaround, favouring pragmatism over perfectionism…

The session template has VirSyn’s Bark Filter over the main output, and it does most of the tonal work for me. It’s a perceptual dynamic EQ with 27 individual bands based on the Bark scale (a psychoacoustic scale named after Heinrich Barkhausen, who proposed the first subjective measurements of loudness). Think of it as the audio equivalent of the Auto function in Apple’s Photos app; what comes out usually sounds better than what goes in, and that’s before I’ve touched the controls. It’s ‘magic’ in the Arthur C. Clarke sense of the word.

Speaking of magic… During the first two takes of Assam’s tue session I noticed a strong resonance somewhere around 350Hz that caused a particular note to jump out, making a ‘doonk’ sound that soon became annoying. Moving the microphones around made no difference; taking off the headphones revealed it was an inherent sound of the instrument that was audible at any useful microphone location. This was one of those situations where the raw ‘warts and all’ authenticity I was aiming for conflicted with the Western listening aesthetic. I had intended to isolate the offending frequency and dip it out during post-production, hoping to find a ‘middle ground’ between authenticity and the Western aesthetic. As it turns out, Bark Filter identified and rectified this problem with its default settings and, being a dynamic EQ, only ducked it out when and as much as necessary. Abracadabra!

After Bark Filter, a very slight touch of Steinberg’s Loudness Maximizer (part of Cubasis 3’s Mastering Strip) tidied up the note-to-note dynamics, making the musicians sound more controlled while allowing me to push the perceived level a little closer to Youtube’s optimum of -14 LUFS.
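Hitting a loudness target involves simple arithmetic. The static gain needed is the target minus the measured integrated loudness. Whatever that gain pushes above the peak ceiling is the amount the limiter has to absorb. The sketch below assumes the integrated loudness and true-peak values come from a meter (the BS.1770 measurement itself isn't implemented here). The -1 dBTP ceiling is a hypothetical choice for illustration.

```python
def loudness_plan(integrated_lufs, true_peak_dbtp,
                  target_lufs=-14.0, ceiling_dbtp=-1.0):
    """Static gain needed to reach a loudness target, plus how much peak
    reduction a limiter would then have to provide.

    target_lufs follows the -14 LUFS figure in the text; ceiling_dbtp is a
    hypothetical true-peak ceiling chosen for illustration.
    """
    gain_db = target_lufs - integrated_lufs
    peak_after_gain = true_peak_dbtp + gain_db
    limiting_db = max(0.0, peak_after_gain - ceiling_dbtp)
    return gain_db, limiting_db

# Example: a piece measuring -20 LUFS with peaks at -3 dBTP
gain, limit = loudness_plan(-20.0, -3.0)
print(f"apply {gain:+.1f} dB, then about {limit:.1f} dB of peak limiting")
```

The limiter also trims the integrated loudness slightly, so in practice this becomes a short iterative loop of measuring and adjusting rather than a single calculation.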

Loading the individual pieces of music as parallel stereo tracks in the session file and monitoring one at a time allows me to quickly jump between pieces, adjusting the channel faders to control relative volumes while also determining how hard each piece is hitting Bark Filter and the Loudness Maximizer on the output. My goal is to get relatively high and consistent audio levels so the videos can be heard when played back from a mobile phone in a village setting, without sounding over-compressed or feeling detached from the video. Having Bark Filter and Loudness Maximizer across the stereo bus means each channel fader (ie. each piece of music) has a dB or two of leeway where I can get more or less processing without making a significant difference to the perceived level between pieces of music. You wouldn’t get away with it when mastering an album, but it’s fine for a collection of videos destined for a Youtube playlist.

(Dubious Pro Tip: the fastest way to match the perceived volume of two different pieces of music is to play them simultaneously; the louder one will dominate the mix, and the balance can be adjusted accordingly. Note that the success rate of this technique is on par with the success rate of Anchorman’s ‘Sex Panther’ cologne: 60% of the time, it works every time.)

The mastered audio tracks are then transferred into a LumaFusion template where they are manually synced with the video files from my cameras using the ‘three claps’ method. I’ll do a bit of colour and lighting work on the video footage if necessary but I don’t take the visual stuff too seriously; I’ll usually jump straight into whichever LUT looks close enough and tweak one or two settings. The video is just a social media wrapper for the sound, so I don’t waste too much time on it. There’s always time to refine things later, but in most cases I’m happy with what I settled on during the day.

MICROPHONE TECHNIQUE

The three sessions mentioned earlier (nor, tue and flute) took place in different locations throughout the same village – Doi Mod – but all were close to the main thoroughfare. It is rarely quiet in a village, so to control the surrounding noise levels I used a stereo technique I devised specifically for recording solo instruments or voices in noisy places: two spaced directional microphones forming an isosceles triangle with the sound source, angled inwards to face it, with the distance from the mics to the sound source being considerably longer than the distance between the mics. For the Lahu sessions I used cardioids, as seen in the illustration below. I have used the same technique with bidirectionals when recording the Moken (Sea Gypsies of the Andaman Sea), and with hypercardioids when recording the Phou Noi (‘small people’) and Lanten (‘cloth dyers’) of Northern Laos.

The goal is to create a stereo recording of the sound source but with most of the surrounding noises pushed out to the sides and/or considerably reduced in level. It’s based on a combination of a Faulkner two-way array and a technique used for recording opera singers in the same space as the orchestra. It also borrows from the ‘two-handheld-mics’ approach historically used by ethnomusicologists when mic stands are not practical, which often produces remarkably good recordings under the circumstances.

When I get it right, this technique creates a focused stereo image on the sound source itself, keeping it reasonably well-centred so that side-to-side movements are not exaggerated across the stereo image and don’t cause any significant off-mic tonal changes. The strong on-axis correlation between the left and right channels (due to having both mics’ axes aimed at the sound source) provides additional gain over sounds arriving from other directions.

I aim for a minimum spacing between the mics of around 38cm; this provides an inter-channel time difference of just over 1ms for sounds arriving from 90° to the mic array, placing those sounds out to the extreme sides of the stereo image to ensure they do not conflict with the sound source in the centre. [1.12ms is the minimum time difference required between channels to push a sound to the extreme left or right side of the stereo image, assuming the amplitude is the same in both channels.] However, because the microphones are directional there will also be inter-channel amplitude differences that will influence the location of sounds in the stereo image, depending on the polar response and angle of incidence. Sometimes it is necessary to use a smaller spacing than 38cm between the mics, which doesn’t push unwanted sounds out to the side extremes as well but does keep the sound source in the centre of a narrow stereo image.
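The time-difference figures above follow from the path difference between the two mics. For a distant source at angle θ from the front of the array, that path difference is d·sin θ. The sketch below uses the 344m/s speed of sound from the Franssen analysis and assumes a plane-wave (far-field) source. It ignores the amplitude differences from the mics' polar responses, which the paragraph notes also influence the image.

```python
import math

SPEED_OF_SOUND = 344.0   # m/s, the value used in the Franssen analysis (about 21 C)
FULL_SIDE_ITD_MS = 1.12  # ms, the figure quoted for a hard left/right image

def itd_ms(spacing_m, angle_deg, c=SPEED_OF_SOUND):
    """Far-field inter-channel time difference (ms) for a spaced pair.

    angle_deg is measured from the front of the array (0 = straight ahead,
    90 = directly to one side). Assumes a distant, plane-wave source.
    """
    return 1000.0 * spacing_m * math.sin(math.radians(angle_deg)) / c

def spacing_for_itd_m(target_ms, c=SPEED_OF_SOUND):
    """Spacing that produces target_ms for a sound arriving from 90 deg."""
    return target_ms / 1000.0 * c

for spacing in (0.25, 0.38, 0.55):
    print(f"{spacing * 100:.0f} cm: {itd_ms(spacing, 90):.2f} ms at 90 deg")
print(f"spacing for {FULL_SIDE_ITD_MS} ms: {spacing_for_itd_m(FULL_SIDE_ITD_MS) * 100:.1f} cm")
```

A 38cm pair gives about 1.10ms for a sound arriving from 90°, marginally under the 1.12ms figure. That matches the "just over 1ms" above.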

The result should be a stereo image with the sound source ‘spot-lighted’ in the centre and most of the other sounds out to the sides, where they become contextual ambience rather than distractions. When possible, I try to situate the sound source so there is a wall behind it; this helps to minimise the amount of external noise entering the microphones from the front/centre that would otherwise get into the sound source’s space in the stereo image, and in some cases also provides acoustic reinforcement that helps increase the relative level of the sound source. Similarly, I am usually standing quietly behind the microphone array to operate my camera, in which case my body serves as a baffle to sounds arriving in the centre from behind.

IMAGING

The goals of the stereo microphone technique described above are to a) create a relatively accurate centre image for the sound source, b) push extraneous sounds out to the sides of the stereo image where they are less intrusive, and c) apply enough rejection (via polar response nulls) to render unwanted sounds unobtrusive.

A pair of monitor speakers configured in the recommended equilateral triangle placement with the listener reproduces a sound stage that covers a range of 60° from hard left to hard right. If the centre of the monitoring system is defined as being 0°, then an image panned hard left would appear at -30° and an image panned hard right would appear at +30°.

The Franssen analysis below shows how the microphone array responds to sounds arriving from 0° to ±90°, based on a pair of cardioids spaced 38cm apart and at a distance of 76cm from the sound source (measured from the central point between the mics). In this example the spacing between the mics is relatively small compared to their distance from the sound source (38cm vs 76cm), each mic is angled inwards to the front at 14°, and the calculations for the analysis were made at a sound velocity of 344m/s (corresponding to an air temperature of 21°C).
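A Franssen analysis maps inter-channel time and level differences onto image positions using Franssen's localisation data, which isn't reproduced here. The sketch below only computes the raw cues that feed such an analysis, for the geometry just described: a 38cm spacing, a performer 76cm away and both mics aimed at the performer. It rests on several assumptions: ideal cardioids, free-field conditions, 1/r pressure loss, and off-centre sources placed on a 76cm circle around the pair. The text doesn't say at which distance those sources were modelled.

```python
import math

C = 344.0            # m/s, as in the analysis above
HALF_SPACING = 0.19  # m (38 cm between the mics)
SOURCE_DIST = 0.76   # m from the centre of the pair to the performer

MIC_L = (-HALF_SPACING, 0.0)   # x: positive to the right
MIC_R = (HALF_SPACING, 0.0)    # y: positive towards the performer
AIM = (0.0, SOURCE_DIST)       # both mics point at the performer

def off_axis_rad(mic, src):
    """Angle between a mic's aiming direction and the direction of src."""
    ax, ay = AIM[0] - mic[0], AIM[1] - mic[1]
    sx, sy = src[0] - mic[0], src[1] - mic[1]
    cos_a = (ax * sx + ay * sy) / (math.hypot(ax, ay) * math.hypot(sx, sy))
    return math.acos(max(-1.0, min(1.0, cos_a)))

def cardioid(theta_rad, floor=1e-6):
    """Ideal cardioid sensitivity, floored so a perfect null avoids log(0)."""
    return max(floor, 0.5 * (1.0 + math.cos(theta_rad)))

def inter_channel(angle_deg, radius=SOURCE_DIST):
    """(time difference in ms, level difference in dB) for a point source at
    angle_deg (0 = front, positive = right) on a circle of the given radius.
    Positive values mean the right channel is earlier / louder."""
    a = math.radians(angle_deg)
    src = (radius * math.sin(a), radius * math.cos(a))
    d_l, d_r = math.dist(MIC_L, src), math.dist(MIC_R, src)
    dt_ms = 1000.0 * (d_l - d_r) / C
    lvl_l = cardioid(off_axis_rad(MIC_L, src)) / d_l   # 1/r pressure loss
    lvl_r = cardioid(off_axis_rad(MIC_R, src)) / d_r
    return dt_ms, 20.0 * math.log10(lvl_r / lvl_l)

for angle in (0, 15, 30, 60, 90):
    dt, dl = inter_channel(angle)
    print(f"{angle:3d} deg: {dt:+.2f} ms, {dl:+.1f} dB")
```

In this model, a source at 90° reaches the two mics at almost equal levels, because each sits well off-axis to one mic while being closer to it. The time difference of about 1.1ms therefore does most of the work of placing it at the side.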

Sounds arriving within ±15° of centre (A) are reproduced within the front ±12° of the stereo image, creating a relatively accurate and ‘close up’ impression of the sound source. Sounds arriving at ±30° (B) are reproduced at around ±23° in the stereo image. Sounds arriving from beyond ±30° start moving further out to the sides of the stereo image, and at ±60° and beyond (C and D) they are reproduced hard left and right.

Predicting the location of sounds arriving from beyond ±90° becomes difficult due to the inward angling of the microphones. For example, if using cardioids as shown here, a sound arriving from 120° on the left side arrives at the left microphone before the right microphone, creating an inter-channel time difference that pushes the image towards the left side. At the same time, however, the right microphone captures the signal at a significantly higher amplitude, creating an inter-channel amplitude difference that pulls the image to the right.

This theoretical example becomes very interesting at around 150° to the centre of the stereo pair, where the sound arrives at 180° off-axis to the left microphone and 125° off-axis to the right microphone, with only 2dB difference between them due to the inverse square law. In a theoretical model using cardioids, the signal arrives into the rear rejection null of the left microphone and is rejected infinitely, placing it to the hard right side of the stereo image – although with about 14dB of attenuation due to arriving 125° off-axis to the right microphone. In practice, however, the rear rejection of a cardioid isn’t infinite, and rarely exceeds 20dB. Factoring in the inverse square law, the end result is a signal with an inter-channel amplitude difference of approximately 4dB that pulls the signal 10° to the right, but an inter-channel time difference of about 0.5ms that pulls it towards the left. (With the mics 38cm apart, the path difference can never exceed 38cm, so no inter-channel time difference can exceed about 1.1ms.)
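The 150° worked example can be reproduced with a little geometry. The source is placed on the extension of the left microphone's axis behind it, at a bearing of 150° from the centre of the pair. The code then computes each mic's off-axis angle, the distance-related level difference and the arrival-time difference. It assumes the same 38cm spacing and 76cm performer distance as the Franssen example, an ideal cardioid response for the right mic and an assumed 20dB practical rear rejection for the left mic.

```python
import math

C = 344.0                  # m/s
H, D = 0.19, 0.76          # half-spacing and performer distance (m)
REAR_REJECTION_DB = -20.0  # assumed practical rear rejection of a real cardioid

mic_l, mic_r, aim = (-H, 0.0), (H, 0.0), (0.0, D)

def vec(a, b):
    return (b[0] - a[0], b[1] - a[1])

def angle_between_deg(v1, v2):
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))

def rear_axis_point(bearing_deg):
    """Point behind the left mic, on its axis, at bearing_deg to the left of
    straight ahead as seen from the centre of the pair."""
    alpha = math.radians(180.0 - bearing_deg)   # angle from straight behind
    u = H / (D * math.tan(alpha) - H)           # valid while D*tan(alpha) > H
    return (-H - H * u, -D * u)

p = rear_axis_point(150.0)
d_l, d_r = math.dist(mic_l, p), math.dist(mic_r, p)
off_l = angle_between_deg(vec(mic_l, aim), vec(mic_l, p))
off_r = angle_between_deg(vec(mic_r, aim), vec(mic_r, p))

distance_db = 20 * math.log10(d_r / d_l)   # left mic is closer
itd_ms = 1000 * (d_r - d_l) / C            # left mic hears it first
right_db = 20 * math.log10(0.5 * (1 + math.cos(math.radians(off_r))))
# Level in each channel = polar response + distance loss (1/r pressure)
ild_db = (right_db - 20 * math.log10(d_r)) - (REAR_REJECTION_DB - 20 * math.log10(d_l))

print(f"off-axis: L {off_l:.0f} deg, R {off_r:.0f} deg")
print(f"distance difference: {distance_db:.1f} dB, time difference: {itd_ms:.2f} ms")
print(f"ideal cardioid at R: {right_db:.1f} dB, net level difference: {ild_db:+.1f} dB (R louder)")
```

The model gives about 124° off-axis for the right mic, roughly 2.3dB of distance difference, a net level difference of about 4.6dB favouring the right, and a time difference of roughly 0.5ms favouring the left. An ideal cardioid comes out at around 13dB down at that angle, slightly less than the rounded figure in the text.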

In all cases beyond ±90°, the result is an image located somewhere between the speakers, with no guarantee of its spatial accuracy or movement, but at considerably lower amplitude than if the sound were arriving from within the front ±90°. In this application those sounds will be the typical village sounds that are not part of the music (roosters, chickens, kids, motorbikes, etc.), and reducing their audibility (as the technique does) is more important than localising them correctly.

Interestingly, if a video camera with 16:9 aspect ratio and a low aperture is placed on axis with the centre of the microphone array, aimed to put the performer in the centre of the screen and adjusted so that the microphones align with the outside borders of the image, the movements of the audio image correlate well with the movements seen in the visual image.

SETTING UP

My approach to microphone setup in these village sessions is, by necessity, methodical and fast. A fast setup is important when working with musicians who don’t understand why it takes longer than three minutes to record a three-minute song, or who need to get back to the fields after the recording. If the setup takes too long the performers can get bored, frustrated or feel that they are running out of time, resulting in boring, unfocused or rushed performances.

I start by choosing the most appropriate polar response, based on knowing roughly what angle the mics will be at (I know they’ll be facing the sound source from somewhere in front of it) and where the most disruptive noise sources are located. The goal is to choose a polar response that will have its null(s) aimed at the most disruptive noise source when the fronts of the microphones are aimed at the sound source.

With the polar response chosen, I place both microphones side-by-side on the stereo bar with the minimum possible distance between them, facing the sound source and tightened into position. Monitoring in headphones, I move the array backwards, forwards, up and down, keeping the mics aimed at the sound source until I find the distance, height and vertical angle that provides the best tone while capturing enough background sound for contextual ambience without being distracting. With the array now set up in that position, I loosen off the mics, grip one in each hand and slowly slide them apart along the stereo bar, angling them subtly inwards as they move further apart to ensure each one remains focused on the sound source.
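Sliding the mics apart while keeping both aimed at the performer traces out the isosceles triangle described in 'Microphone Technique'. Each mic's inward angle is the arctangent of half the spacing divided by the distance to the source. The sketch below uses the 76cm distance from the Franssen example purely as an illustrative value. On location the distance is whatever the headphone check arrived at.

```python
import math

def toe_in_deg(spacing_m, distance_m):
    """Inward angle for each mic so both axes meet at a source distance_m
    in front of the centre of the pair (isosceles-triangle geometry)."""
    return math.degrees(math.atan2(spacing_m / 2.0, distance_m))

# Illustrative distance taken from the Franssen example (0.76 m).
for spacing_cm in (2, 10, 20, 30, 38):
    print(f"{spacing_cm:2d} cm apart -> {toe_in_deg(spacing_cm / 100, 0.76):4.1f} deg each")
```

Over the usable range, the angle grows almost in proportion to the spacing, which is why a subtle, continuous inward turn while sliding the mics apart keeps them on target.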

When a spacing that provides the desired stereo image is found, I check in mono to make sure the instrument or voice remains the dominant sound and that there are no significant comb filtering issues. If the sound source gets weaker in mono than it is in stereo it means the mics are too far apart, and the balance probably won’t translate well to speaker playback. If there are any significant comb filtering problems I’ll tweak the spacing a couple of centimetres to minimise them. After checking for mono compatibility, I’ll tighten the mics into position on the stereo bar and get on with the recording.
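A sound on the centre line reaches both mics at the same time, so the comb filtering heard in the mono check mainly affects off-centre sounds and reflections. When two copies of a sound separated by a time difference Δt are summed, cancellations fall at odd multiples of 1/(2Δt). The level difference between the two copies sets how deep those notches are. The sketch below is an idealised model with frequency-independent levels. It shows why a couple of centimetres of respacing moves the notches, and why the level differences of directional mics make them shallower.

```python
import math

C = 344.0  # m/s

def mono_notches_hz(time_diff_s, count=4):
    """Frequencies cancelled when two copies of a sound, time_diff_s apart,
    are summed to mono: odd multiples of 1 / (2 * time_diff_s)."""
    return [(2 * k + 1) / (2.0 * time_diff_s) for k in range(count)]

def notch_depth_db(level_diff_db):
    """Depth of those notches relative to the in-phase peaks when one copy is
    level_diff_db quieter than the other (equal levels give a complete null)."""
    g = 10 ** (-abs(level_diff_db) / 20.0)
    if g >= 1.0:
        return float("-inf")
    return 20.0 * math.log10((1.0 - g) / (1.0 + g))

for spacing in (0.38, 0.36):     # a 2 cm tweak
    dt = spacing / C             # worst case: sound arriving from 90 deg
    print(spacing, [round(f) for f in mono_notches_hz(dt, 3)])
print(notch_depth_db(6.0))       # about -9.6 dB
```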

If the setup has gone quickly, I’ll make a short test recording while checking levels and let the musician listen to the playback. (This, of course, relies on having a set of headphones that you can easily pass around – which was one of the motivating factors discussed in my field report of Neumann’s NDH20 headphones.) It’s amazing how much this can improve the end result. Sometimes the musician will change their playing technique for an improved sound, ask a neighbour to re-locate a noisy rooster, or do something similar. These actions are a reminder that most of these musicians have never been recorded properly before, and hearing themselves played back through decent headphones is surprising and inspiring. They’re not used to microphones that can hear people talking in the hut next door, and once they hear how good they sound they’re often keen to minimise distracting sounds. It’s always fun watching them look around for a distracting sound that’s nowhere to be seen, such as a passing motorbike, before realising it’s actually in the recording.

ISOLATION & DAMPING

The microphones in my recording rig are a matched pair of Sennheiser MKH800s. With five different polar responses, high immunity to humidity and weighing just 135 grams each, they’re ideal for my purposes. Their light weight, however, makes it difficult to provide decent isolation from physical vibrations. When mounted in Sennheiser’s supplied shock-mount they wobble around with the slightest movement or breeze – especially when using the lighter weight cabling found in multicores, which does not provide as much damping as a normal microphone cable. Wobbling microphones are never a good thing during a recording, and even worse when they wobble independently as part of a stereo pair.

To overcome this I use standard microphone clips to hold the mics securely to the stereo bar, and isolate the entire rig instead – mics, stereo bar, stand and stereo cable. The combined mass is considerably heavier and therefore easier to isolate and dampen without leaving the microphones wobbling in the breeze.

I use three small foam blocks, about 10cm square and 4cm thick, with one placed under each foot of the microphone stand or tripod (note that a camera tripod offers greater stability than a microphone stand, and is particularly advantageous on uneven ground). A length of gaffer tape has been wound around the outside edge of each foam block to control the tension on the foam and thereby ensure it is in a springy/shock-absorbing state when the weight of the microphone rig is on it, so it can do its job properly. Typically, the foam should be compressed just enough that it acts like a slow-motion shock-absorbing spring when the microphone rig is nudged or bumped. If the foam is so soft that it is fully compressed, or so firm that it is not compressed at all, it is essentially a solid and will not provide the required shock-mounting.
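The "springy" requirement can be framed with the standard mass-on-a-spring model of vibration isolation. The natural frequency f_n depends only on how far the spring compresses under the load. Vibration is only attenuated above about √2 × f_n. A block that is barely compressed behaves like a very stiff spring with a high natural frequency, so it passes most audible vibration. A fully compressed block has effectively bottomed out and stops acting as a spring at all. The sketch below assumes a linear spring with viscous damping, which is a rough simplification since real foam is non-linear and heavily damped. The deflection values are illustrative, not measurements of the blocks described above.

```python
import math

G = 9.81  # m/s^2

def natural_freq_hz(static_deflection_m):
    """Natural frequency of a mass resting on a linear spring, from how far
    the spring compresses under that mass: f_n = sqrt(g / deflection) / (2*pi)."""
    return math.sqrt(G / static_deflection_m) / (2 * math.pi)

def transmissibility(freq_ratio, damping_ratio):
    """Fraction of floor vibration passed to the rig at f / f_n
    (single-degree-of-freedom model with viscous damping)."""
    r2 = freq_ratio ** 2
    two_zr_sq = (2 * damping_ratio * freq_ratio) ** 2
    return math.sqrt((1 + two_zr_sq) / ((1 - r2) ** 2 + two_zr_sq))

for deflection_mm in (1, 5, 10):
    fn = natural_freq_hz(deflection_mm / 1000)
    print(f"{deflection_mm:2d} mm -> f_n {fn:4.1f} Hz, isolating above {fn * math.sqrt(2):4.1f} Hz")

# Illustrative: a 40 Hz footstep on a rig sitting on 5 mm of deflection
print(transmissibility(40 / natural_freq_hz(0.005), 0.1))
```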

The stereo microphone cable is isolated by leaving an unobstructed loop (like one coil of a spring) between where the cable leaves the stand and where it meets the floor. Any physical vibration entering the cable from the floor should be absorbed by expansion and contraction of the loop and shouldn’t cause any sound issues.

This approach works very well, but it’s useless when you forget to pack the foam blocks – something I managed to do on all three of the instrument recording sessions. Doh! As a consequence, you will undoubtedly hear some low frequency bumps coming up the microphone stand as people move around on the unstable floors. They are particularly noticeable during the interviews, where a lot of moving around takes place behind the scenes. Thankfully none of the recorded instruments contained any significant low frequency content, so these problems should be easily fixed with a high pass filter, judicious use of RX, or a few dB of forgiveness.
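As a neutral illustration of the high pass filter option, here is a standard second-order high-pass biquad using the formulas from Robert Bristow-Johnson's Audio EQ Cookbook, written in plain Python. It is not the filter used in Cubasis or RX. The 60Hz corner frequency is a hypothetical choice; in practice it would sit just below the instrument's lowest useful content. The test signal is also illustrative: a DC step stands in for a slow low-frequency disturbance, and a 1kHz tone stands in for the instrument.

```python
import math

def highpass_coeffs(fc_hz, fs_hz, q=1 / math.sqrt(2)):
    """Second-order high-pass biquad coefficients (Audio EQ Cookbook form),
    normalised so a0 == 1. q = 1/sqrt(2) gives a Butterworth response."""
    w0 = 2 * math.pi * fc_hz / fs_hz
    cos_w0, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = [(1 + cos_w0) / 2 / a0, -(1 + cos_w0) / a0, (1 + cos_w0) / 2 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a

def biquad(samples, b, a):
    """Filter a sequence of floats with a direct form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

FS = 48000
b, a = highpass_coeffs(60.0, FS)   # hypothetical 60 Hz corner
# DC step (stand-in for a slow disturbance) plus a 1 kHz tone (the "instrument")
signal = [1.0 + math.sin(2 * math.pi * 1000 * n / FS) for n in range(FS)]
filtered = biquad(signal, b, a)
```

After the filter settles, the offset has gone and the 1kHz tone passes essentially unchanged.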

BACK TO THE VILLAGE…

To accurately represent the Lahu’s music meant that I needed to record it in the jakuh, along with the foot percussion and the acoustic reinforcement of the surrounding wall. As the celebrations for Chinese New Year approached I planned to place my microphones on the ceremonial mound in the centre of the jakuh – only to learn that the ceremonial mound is a sacred place and not intended for microphone stands or tripods. I also learnt that there would be random fireworks going off throughout the day and night, from different locations around the jakuh and throughout the village, that would ruin the type of recordings I had in mind and defeat the purpose of taking high quality microphones. So to capture the Chinese New Year celebrations I took only my cameras, capturing the sound through their in-built microphones and relying on their internal filtering, limiting and whatever other processing they used to deliver an acceptable result. The brief but colourful video from inside the jakuh at Huay Nam Rin, shown earlier (Lahu 26), was captured this way with the Insta360 One X camera.

I plan to return to the Lahu villages in the near future with an ambisonic microphone rig and 360 camera, and discuss the possibilities with the village headmen. If I cannot mount the mics and camera on the ceremonial mound then perhaps I can suspend them above it, fit them to a musician or dancer, or maybe even join the procession around the ceremonial mound. My preference is for a central placement because, among other things, it will put the microphones much closer to the musicians and dancers than to the rest of the village, keeping the impact of external noises at a distance – such as the persistent chatter of locals at the perimeter, as heard in the jakuh videos above. There are a few options to explore…

In the meantime, I will leave you with this moment of conversation where Winai offers Japua some spirited advice for putting the ‘tue’ into ‘tune’. Enjoy!
